Scientists have known for some time that a protein called presenilin plays a role in Alzheimer's disease, and a new study reveals one intriguing way this happens.

It has to do with how materials travel up and down brain cells, which are also called neurons.

In an Oct. 8 paper in Human Molecular Genetics, University at Buffalo researchers report that presenilin works with an enzyme called GSK-3ß to control how fast materials -- like proteins needed for cell survival -- move through the cells.

"If you have too much presenilin or too little, it disrupts the activity of GSK-3ß, and the transport of cargo along neurons becomes uncoordinated," says lead researcher Shermali Gunawardena, PhD, an assistant professor of biological sciences at UB. "This can lead to dangerous blockages."

More than 150 mutations of presenilin have been found in Alzheimer's patients, and scientists have previously shown that the protein, when defective, can cause neuronal blockages by snipping another protein into pieces that accumulate in brain cells.

But this well-known mechanism isn't the only way presenilin fuels disease, as Gunawardena's new study shows.

"Our work elucidates how problems with presenilin could contribute to early problems observed in Alzheimer's disease," she says. "It highlights a potential pathway for early intervention through drugs -- prior to neuronal loss and clinical manifestations of disease."

The study suggests that presenilin activates GSK-3ß. This is an important finding because the enzyme helps control the speed at which tiny, organic bubbles called vesicles ferry cargo along neuronal highways. (You can think of vesicles as trucks, each powered by little molecular motors called dyneins and kinesins.)

When researchers lowered the amount of presenilin in the neurons of fruit fly larvae, less GSK-3ß became activated and vesicles began speeding along cells in an uncontrolled manner.

Decreasing levels of both presenilin and GSK-3ß at once made things worse, resulting in "traffic jams" as the bubbles got stuck in neurons.

"Both GSK-3ß and presenilin have been shown to be involved in Alzheimer's disease, but how they are involved has not always been clear," Gunawardena says. "Our research provides new insight into this question."

Gunawardena proposes that GSK-3ß -- short for glycogen synthase kinase-3beta -- acts as an "on switch" for dynein and kinesin motors, telling them when to latch onto vesicles.

Dyneins carry vesicles toward the cell nucleus, while kinesins move in the other direction, toward the periphery of the cell. When all is well and GSK-3ß levels are normal, both types of motors bind to vesicles in carefully calibrated numbers, resulting in smooth traffic flow along neurons.

That's why it's so dangerous when GSK-3ß levels are off-kilter, she says.

When GSK-3ß levels are high, too many motors attach to the vesicles, leading to slow movement as motor activity loses coordination. Low GSK-3ß levels appear to have the opposite effect, causing fast, uncontrolled movement as too few motors latch onto vesicles.

Nurturing May Protect Kids from Brain Changes Linked to Poverty!

Growing up in poverty can have long-lasting, negative consequences for a child. But for poor children raised by parents who lack nurturing skills, the effects may be particularly worrisome, according to a new study at Washington University School of Medicine in St. Louis.

Among children living in poverty, the researchers identified changes in the brain that can lead to lifelong problems like depression, learning difficulties and limitations in the ability to cope with stress. The study showed that the extent of those changes was influenced strongly by whether parents were nurturing.

The good news, according to the researchers, is that a nurturing home life may offset some of the negative changes in brain anatomy among poor children. And the findings suggest that teaching nurturing skills to parents -- particularly those living in poverty -- may provide a lifetime benefit for their children.

The study is published online Oct. 28 and will appear in the November issue of JAMA Pediatrics.

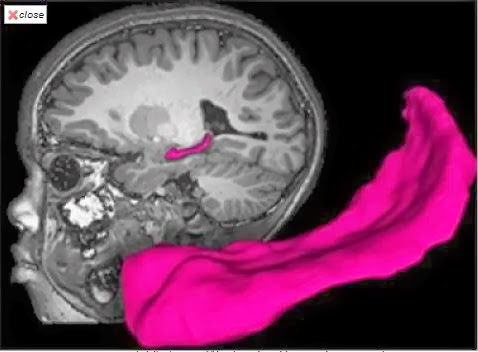

Using magnetic resonance imaging (MRI), the researchers found that poor children with parents who were not very nurturing were likely to have less gray and white matter in the brain. Gray matter is closely linked to intelligence, while white matter often is linked to the brain's ability to transmit signals between various cells and structures.

The MRI scans also revealed that two key brain structures were smaller in children who were living in poverty: the amygdala, a key structure in emotional health, and the hippocampus, an area of the brain that is critical to learning and memory.

"We've known for many years from behavioral studies that exposure to poverty is one of the most powerful predictors of poor developmental outcomes for children," said principal investigator Joan L. Luby, MD, a Washington University child psychiatrist at St. Louis Children's Hospital. "A growing number of neuroscience and brain-imaging studies recently have shown that poverty also has a negative effect on brain development.

"What's new is that our research shows the effects of poverty on the developing brain, particularly in the hippocampus, are strongly influenced by parenting and life stresses that the children experience."

Luby, a professor of psychiatry and director of the university's Early Emotional Development Program, is in the midst of a long-term study of childhood depression. As part of the Preschool Depression Study, she has been following 305 healthy and depressed kids since they were in preschool. As the children have grown, they also have received MRI scans that track brain development.

"We actually stumbled upon this finding," she said. "Initially, we thought we would have to control for the effects of poverty, but as we attempted to control for it, we realized that poverty was really driving some of the outcomes of interest, and that caused us to change our focus to poverty, which was not the initial aim of this study."

In the new study, Luby's team looked at scans from 145 children enrolled in the depression study. Some were depressed, others healthy, and others had been diagnosed with different psychiatric disorders such as ADHD (attention-deficit hyperactivity disorder). As she studied these children, Luby said it became clear that poverty and stressful life events, which often go hand in hand, were affecting brain development.

The researchers measured poverty using what's called an income-to-needs ratio, which takes a family's size and annual income into account. The current federal poverty level is $23,550 for a family of four.
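
The article does not spell out how the ratio is calculated. As a rough sketch, assuming the common definition (a family's total annual income divided by the federal poverty level for a family of that size), the arithmetic looks like this. The function name and the example income are hypothetical; only the $23,550 figure comes from the article.

```python
# Federal poverty level for a family of four, as quoted in the article.
POVERTY_LEVEL_FAMILY_OF_FOUR = 23_550


def income_to_needs(annual_income: float, poverty_level: float) -> float:
    """Return annual income divided by the poverty level for the family's size.

    Assumed definition: a ratio below 1.0 means the family is below the
    poverty level; 2.0 means income is twice the poverty level.
    """
    if poverty_level <= 0:
        raise ValueError("poverty_level must be positive")
    return annual_income / poverty_level


# Hypothetical family of four earning $35,325 a year.
ratio = income_to_needs(35_325, POVERTY_LEVEL_FAMILY_OF_FOUR)
print(ratio)  # 1.5
```

Poverty levels for other family sizes are not given in the article and would have to be supplied separately.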

Although the investigators found that poverty had a powerful impact on gray matter, white matter, hippocampal and amygdala volumes, they found that the main driver of changes among poor children in the volume of the hippocampus was not lack of money but the extent to which poor parents nurture their children. The hippocampus is a key brain region of interest in studying the risk for impairments.

Luby's team rated nurturing using observations made by the researchers -- who were unaware of characteristics such as income level or whether a child had a psychiatric diagnosis -- when the children came to the clinic for an appointment. And on one of the clinic visits, the researchers rated parental nurturing using a test of the child's impatience and of a parent's patience with that child.

While waiting to see a health professional, a child was given a gift-wrapped package, and that child's parent or caregiver was given paperwork to fill out. The child, meanwhile, was told that s/he could not open the package until the caregiver completed the paperwork, a task that researchers estimated would take about 10 minutes.

Luby's team found that parents living in poverty appeared more stressed and less able to nurture their children during that exercise. In cases where poor parents were rated as good nurturers, the children were less likely to exhibit the same anatomical changes in the brain as poor children with less nurturing parents.

"Parents can be less emotionally responsive for a whole host of reasons," Luby said. "They may work two jobs or regularly find themselves trying to scrounge together money for food. Perhaps they live in an unsafe environment. They may be facing many stresses, and some don't have the capacity to invest in supportive parenting as much as parents who don't have to live in the midst of those adverse circumstances."

The researchers also found that poorer children were more likely to experience stressful life events, which can influence brain development. Anything from moving to a new house to changing schools to having parents who fight regularly to the death of a loved one is considered a stressful life event.

Luby believes this study could provide policymakers with at least a partial answer to the question of what it is about poverty that can be so detrimental to a child's long-term developmental outcome. Because it appears that a nurturing parent or caregiver may prevent some of the changes in brain anatomy that this study identified, Luby said it is vital that society invest in public health prevention programs that target parental nurturing skills. She suggested that a key next step would be to determine if there are sensitive developmental periods when interventions with parents might have the most powerful impact.

"Children who experience positive caregiver support don't necessarily experience the developmental, cognitive and emotional problems that can affect children who don't receive as much nurturing, and that is tremendously important," Luby said. "This study gives us a feasible, tangible target with the suggestion that early interventions that focus on parenting may provide a tremendous payoff."

Smart Neurons: Single Neuronal Dendrites Can Perform Computations

When you look at the hands of a clock or the streets on a map, your brain is effortlessly performing computations that tell you about the orientation of these objects. New research by UCL scientists has shown that these computations can be carried out by the microscopic branches of neurons known as dendrites, which are the receiving elements of neurons.

The study, published today (Sunday) in Nature and carried out by researchers based at the Wolfson Institute for Biomedical Research at UCL, the MRC Laboratory for Molecular Biology in Cambridge and the University of North Carolina at Chapel Hill, examined neurons in areas of the mouse brain which are responsible for processing visual input from the eyes. The scientists achieved an important breakthrough: they succeeded in making incredibly challenging electrical and optical recordings directly from the tiny dendrites of neurons in the intact brain while the brain was processing visual information.

These recordings revealed that visual stimulation produces specific electrical signals in the dendrites -- bursts of spikes -- which are tuned to the properties of the visual stimulus.

The results challenge the widely held view that this kind of computation is achieved only by large numbers of neurons working together, and demonstrate how the basic components of the brain are exceptionally powerful computing devices in their own right.

Senior author Professor Michael Hausser commented: "This work shows that dendrites, long thought to simply 'funnel' incoming signals towards the soma, instead play a key role in sorting and interpreting the enormous barrage of inputs received by the neuron. Dendrites thus act as miniature computing devices for detecting and amplifying specific types of input.

"This new property of dendrites adds an important new element to the 'toolkit' for computation in the brain. This kind of dendritic processing is likely to be widespread across many brain areas and indeed many different animal species, including humans."

Funding for this study was provided by the Gatsby Charitable Foundation, the Wellcome Trust, and the European Research Council, as well as the Human Frontier Science Program, the Klingenstein Foundation, Helen Lyng White, the Royal Society, and the Medical Research Council.

Need Different Types of Tissue? Just Print Them!

What sounds like a dream of the future has already been the subject of research for a few years: simply printing out tissue and organs. Now scientists have further refined the technology and are able to produce various tissue types.

The recent organ transplant scandals have only made the problem worse. According to the German Organ Transplantation Foundation (DSO), the number of organ donors in the first half of 2013 declined by more than 18 percent compared with the same period the previous year. At the same time, one can assume that demand will rise steadily in the coming years, because we continue to age and the field of transplantation medicine is continuously advancing. Many critical illnesses can already be successfully treated today by replacing cells, tissue, or organs. Government, industry, and the research establishment have therefore been working hard for some time to improve methods and procedures for artificially producing tissue. This is how the gap in supply is supposed to be closed.

Bio-ink made from living cells

One technology might assume a decisive role in this effort, one that we are all familiar with from the office, and that most of us would certainly not immediately connect with the production of artificial tissue: the inkjet printer. Scientists of the Fraunhofer Institute for Interfacial Engineering and Biotechnology (IGB) in Stuttgart have succeeded in developing suitable bio-inks for this printing technology. The transparent liquids consist of components from the natural tissue matrix and living cells. The substance is based on a well-known biological material: gelatin. Gelatin is derived from collagen, the main constituent of native tissue. The researchers have chemically modified the gelling behavior of the gelatin to adapt the biological molecules for printing. Instead of gelling like unmodified gelatin, the bio-inks remain fluid during printing. Only after they are irradiated with UV light do they crosslink and cure to form hydrogels. These are polymers that contain a huge amount of water (just like native tissue) but remain stable in aqueous environments and when warmed to a physiological 37°C. The researchers can control the chemical modification of the biological molecules so that the resulting gels have differing strengths and swelling characteristics. The properties of natural tissue can therefore be imitated -- from solid cartilage to soft adipose tissue.

Synthetic raw materials that can serve as substitutes for the extracellular matrix are printed in Stuttgart as well. One example is a system that cures to a hydrogel devoid of by-products and can be immediately populated with genuine cells. "We are concentrating at the moment on the 'natural' variant. That way we remain very close to the original material. Even if the potential for synthetic hydrogels is big, we still need to learn a fair amount about the interactions between the artificial substances and cells or natural tissue. Our biomolecule-based variants provide the cells with a natural environment instead, and therefore can promote the self-organizing behavior of the printed cells to form a functional tissue model," explains Dr. Kirsten Borchers in describing the approach at IGB.

The printers at the labs in Stuttgart have a lot in common with conventional office printers: the ink reservoirs and jets are all the same. The differences become apparent only under close inspection. One example is the heater on the ink container, which sets the right temperature for the bio-inks. The number of jets and tanks is also smaller than in the office counterpart. "We would like to increase the number of these in cooperation with industry and other Fraunhofer Institutes in order to simultaneously print using various inks with different cells and matrices. This way we can come closer to replicating complex structures and different types of tissue," says Borchers.

The big challenge at the moment is to produce vascularized tissue. This means tissue that has its own system of blood vessels through which the tissue can be provided with nutrients. IGB is working on this jointly with other partners under Project ArtiVasc 3D, supported by the European Union. The core of this project is a technology platform to generate fine blood vessels from synthetic materials and thereby create for the first time artificial skin with its subcutaneous adipose tissue. "This step is very important for printing tissue or entire organs in the future. Only once we are successful in producing tissue that can be nourished through a system of blood vessels can printing larger tissue structures become feasible," says Borchers in closing. She will be exhibiting the IGB bioinks at Biotechnica in Hanover, 8-10 October 2013 (Hall 9, Booth E09).

Pressure in the Left Heart - Part 2

Watch the pressure in the left heart go up and down with every heartbeat! Rishi is a pediatric infectious disease physician. These videos do not provide medical advice and are for informational purposes only. The videos are not intended to be a substitute for professional medical advice, diagnosis or treatment. Always seek the advice of a qualified health provider with any questions you may have regarding a medical condition. Never disregard professional medical advice or delay in seeking it because of something you have read or seen in any video.

Pressure in the Left Heart - Part 1

Watch the pressure in the left heart go up and down with every heart beat! Rishi is a pediatric infectious disease physician and works at Khan Academy. These videos do not provide medical advice and are for informational purposes only. The videos are not intended to be a substitute for professional medical advice, diagnosis or treatment. Always seek the advice of a qualified health provider with any questions you may have regarding a medical condition. Never disregard professional medical advice or delay in seeking it because of something you have read or seen in any video.

Cardiac Cycle Broken Down

You can find this video and other helpful videos and materials (practice sheet and questions) on my website: www.profroofs.com. The Wiggers Diagram of the Cardiac Cycle is usually difficult to understand at first. In this video I relate it to an analogy of a haunted house. I walk you from the basic ideas you already know to an understanding of the detailed diagram. Additionally, I give a few practice questions at the end. I hope this is helpful. If you have any questions, contact me here: mail2jenakan2@gmail.com

Cardiac Cycle

This is about Cardiac Cycle! Hello friends, you can read or watch a description of the events occurring during ventricular systole and diastole, and a discussion of cardiac output.

Gravitational Waves Help Us Understand Black-Hole Weight Gain

Supermassive black holes: every large galaxy's got one. But here's a real conundrum: how did they grow so big?

A paper in today's issue of Science pits the front-running ideas about the growth of supermassive black holes against observational data -- a limit on the strength of gravitational waves, obtained with CSIRO's Parkes radio telescope in eastern Australia.

"This is the first time we've been able to use information about gravitational waves to study another aspect of the Universe -- the growth of massive black holes," co-author Dr Ramesh Bhat from the Curtin University node of the International Centre for Radio Astronomy Research (ICRAR) said.

"Black holes are almost impossible to observe directly, but armed with this powerful new tool we're in for some exciting times in astronomy. One model for how black holes grow has already been discounted, and now we're going to start looking at the others."

The study was jointly led by Dr Ryan Shannon, a Postdoctoral Fellow with CSIRO, and Mr Vikram Ravi, a PhD student co-supervised by the University of Melbourne and CSIRO.

Einstein predicted gravitational waves -- ripples in space-time, generated by massive bodies changing speed or direction, bodies like pairs of black holes orbiting each other.

When galaxies merge, their central black holes are doomed to meet. They first waltz together then enter a desperate embrace and merge.

"When the black holes get close to meeting they emit gravitational waves at just the frequency that we should be able to detect," Dr Bhat said.

Played out again and again across the Universe, such encounters create a background of gravitational waves, like the noise from a restless crowd.

Astronomers have been searching for gravitational waves with the Parkes radio telescope and a set of 20 small, spinning stars called pulsars.

Pulsars act as extremely precise clocks in space. The arrival times of their pulses on Earth are measured with exquisite precision, to within a tenth of a microsecond.

When the waves roll through an area of space-time, they temporarily swell or shrink the distances between objects in that region, altering the arrival time of the pulses on Earth.

The Parkes Pulsar Timing Array (PPTA), and an earlier collaboration between CSIRO and Swinburne University, together provide nearly 20 years' worth of timing data. This isn't long enough to detect gravitational waves outright, but the team say they're now in the right ballpark.

"The PPTA results are showing us how low the background rate of gravitational waves is," said Dr Bhat.

"The strength of the gravitational wave background depends on how often supermassive black holes spiral together and merge, how massive they are, and how far away they are. So if the background is low, that puts a limit on one or more of those factors."

Armed with the PPTA data, the researchers tested four models of black-hole growth. They effectively ruled out black holes gaining mass only through mergers, but the other three models are still a possibility.

Dr Bhat also said the Curtin University-led Murchison Widefield Array (MWA) radio telescope will be used to support the PPTA project in the future.

"The MWA's large view of the sky can be exploited to observe many pulsars at once, adding valuable data to the PPTA project as well as collecting interesting information on pulsars and their properties," Dr Bhat said.

Brain May Flush out Toxins During Sleep; Sleep Clears Brain of Molecules Associated With Neurodegeneration: Study

A good night's rest may literally clear the mind. Using mice, researchers showed for the first time that the space between brain cells may increase during sleep, allowing the brain to flush out toxins that build up during waking hours. These results suggest a new role for sleep in health and disease. The study was funded by the National Institute of Neurological Disorders and Stroke (NINDS), part of the NIH.

"Sleep changes the cellular structure of the brain. It appears to be a completely different state," said Maiken Nedergaard, M.D., D.M.Sc., co-director of the Center for Translational Neuromedicine at the University of Rochester Medical Center in New York, and a leader of the study.

For centuries, scientists and philosophers have wondered why people sleep and how it affects the brain. Only recently have scientists shown that sleep is important for storing memories. In this study, Dr. Nedergaard and her colleagues unexpectedly found that sleep may also be the period when the brain cleanses itself of toxic molecules.

Their results, published in Science, show that during sleep a plumbing system called the glymphatic system may open, letting fluid flow rapidly through the brain. Dr. Nedergaard's lab recently discovered the glymphatic system helps control the flow of cerebrospinal fluid (CSF), a clear liquid surrounding the brain and spinal cord.

"It's as if Dr. Nedergaard and her colleagues have uncovered a network of hidden caves and these exciting results highlight the potential importance of the network in normal brain function," said Roderick Corriveau, Ph.D., a program director at NINDS.

Initially the researchers studied the system by injecting dye into the CSF of mice and watching it flow through their brains while simultaneously monitoring electrical brain activity. The dye flowed rapidly when the mice were unconscious, either asleep or anesthetized. In contrast, the dye barely flowed when the same mice were awake.

"We were surprised by how little flow there was into the brain when the mice were awake," said Dr. Nedergaard. "It suggested that the space between brain cells changed greatly between conscious and unconscious states."

To test this idea, the researchers used electrodes inserted into the brain to directly measure the space between brain cells. They found that the space inside the brains increased by 60 percent when the mice were asleep or anesthetized.

"These are some dramatic changes in extracellular space," said Charles Nicholson, Ph.D., a professor at New York University's Langone Medical Center and an expert in measuring the dynamics of brain fluid flow and how it influences nerve cell communication.

Certain brain cells, called glia, control flow through the glymphatic system by shrinking or swelling. Noradrenaline is an arousing hormone that is also known to control cell volume. Similar to using anesthesia, treating awake mice with drugs that block noradrenaline induced unconsciousness and increased brain fluid flow and the space between cells, further supporting the link between the glymphatic system and consciousness.

Previous studies suggest that toxic molecules involved in neurodegenerative disorders accumulate in the space between brain cells. In this study, the researchers tested whether the glymphatic system controls this by injecting mice with labeled beta-amyloid, a protein associated with Alzheimer's disease, and measuring how long it lasted in their brains when they were asleep or awake. Beta-amyloid disappeared faster in mice brains when the mice were asleep, suggesting sleep normally clears toxic molecules from the brain.

"These results may have broad implications for multiple neurological disorders," said Jim Koenig, Ph.D., a program director at NINDS. "This means the cells regulating the glymphatic system may be new targets for treating a range of disorders."

The results may also highlight the importance of sleep.

"We need sleep. It cleans up the brain," said Dr. Nedergaard.

Researchers Advance Toward Engineering 'Wildly New Genome'

In two parallel projects, researchers have created new genomes inside the bacterium E. coli in ways that test the limits of genetic reprogramming and open new possibilities for increasing flexibility, productivity and safety in biotechnology.

In one project, researchers created a novel genome -- the first-ever entirely genomically recoded organism -- by replacing all 321 instances of a specific "genetic three-letter word," called a codon, throughout the organism's entire genome with a word of supposedly identical meaning. The researchers then reintroduced a reprogramed version of the original word (with a new meaning, a new amino acid) into the bacteria, expanding the bacterium's vocabulary and allowing it to produce proteins that do not normally occur in nature.

In the second project, the researchers removed every occurrence of 13 different codons across 42 separate E. coli genes, using a different organism for each gene, and replaced them with other codons of the same function. When they were done, 24 percent of the DNA across the 42 targeted genes had been changed, yet the proteins the genes produced remained identical to those produced by the original genes.
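The idea of swapping a codon for a synonym without changing the protein can be illustrated with a toy example. The sketch below is not the researchers' method, which relied on genome editing in living cells. It uses a hypothetical nine-codon sequence and a small excerpt of the standard genetic code, and the choice of replacing the leucine codon TTA with CTG is an assumption made only for illustration.

```python
# Toy illustration of synonymous codon replacement (hypothetical sequence).
# CODON_TABLE is a small excerpt of the standard genetic code, DNA alphabet.
CODON_TABLE = {
    "ATG": "M",
    "TTA": "L", "TTG": "L", "CTG": "L",
    "AGC": "S", "TCT": "S",
    "AAA": "K",
    "TAA": "*",  # stop codon
}


def split_codons(dna):
    """Split a DNA string into consecutive three-letter codons."""
    if len(dna) % 3 != 0:
        raise ValueError("sequence length must be a multiple of 3")
    return [dna[i:i + 3] for i in range(0, len(dna), 3)]


def translate(dna):
    """Translate a DNA string into one-letter amino acid codes."""
    return "".join(CODON_TABLE[codon] for codon in split_codons(dna))


def recode(dna, old, new):
    """Replace every in-frame occurrence of codon `old` with synonym `new`."""
    if CODON_TABLE[old] != CODON_TABLE[new]:
        raise ValueError("codons are not synonymous")
    return "".join(new if codon == old else codon for codon in split_codons(dna))


def fraction_changed(before, after):
    """Fraction of positions at which two equal-length sequences differ."""
    return sum(a != b for a, b in zip(before, after)) / len(before)


original = "ATGTTAAGCTTAAAATTATCTTTGTAA"   # made-up gene, not real E. coli DNA
recoded = recode(original, "TTA", "CTG")

print(translate(original) == translate(recoded))  # protein is unchanged
print(f"{fraction_changed(original, recoded):.0%} of the DNA letters changed")
```

In this toy case the chosen codon disappears from the sequence and a sizeable share of the DNA letters change, yet the translated protein is identical, which is the property the second project tested at much larger scale.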

"The first project is saying that we can take one codon, completely remove it from the genome, then successfully reassign its function," said Marc Lajoie, a Harvard Medical School graduate student in the lab of George Church. "For the second project we asked, 'OK, we've changed this one codon, how many others can we change?'"

Of the 13 codons chosen for the project, all could be changed.

"That leaves open the possibility that we could potentially replace any or all of those 13 codons throughout the entire genome," Lajoie said.

The results of these two projects appear today in Science. The work was led by Church, Robert Winthrop Professor of Genetics at Harvard Medical School and founding core faculty member at the Wyss Institute for Biologically Inspired Engineering. Farren Isaacs, assistant professor of molecular, cellular, and developmental biology at Yale School of Medicine, is co-senior author on the first study.

Toward safer, more productive, more versatile biotech

Recoded genomes can confer protection against viruses -- which limit productivity in the biotech industry -- and help prevent the spread of potentially dangerous genetically engineered traits to wild organisms.

"In science we talk a lot about the 'what' and the 'how' of things, but in this case, the 'why' is very important," Church said, explaining how this project is part of an ongoing effort to improve the safety, productivity and flexibility of biotechnology.

"These results might also open a whole new chemical toolbox for biotech production," said Isaacs. "For example, adding durable polymers to a therapeutic molecule could allow it to function longer in the human bloodstream."

But to have such an impact, the researchers said, large swaths of the genome need to be changed all at once.

"If we make a few changes that make the microbe a little more resistant to a virus, the virus is going to compensate. It becomes a back and forth battle," Church said. "But if we take the microbe offline and make a whole bunch of changes, when we bring it back and show it to the virus, the virus is going to say 'I give up.' No amount of diversity in any reasonable natural virus population is going to be enough to compensate for this wildly new genome."

In the first study, with just a single codon removed, the genomically recoded organism showed increased resistance to viral infection. The same potential "wildly new genome" would make it impossible for engineered genes to escape into wild populations, Church said, because they would be incompatible with natural genomes. This could be of considerable benefit with strains engineered for drug or pesticide resistance, for example. What's more, incorporating rare, non-standard amino acids could ensure strains only survive in a laboratory environment.

Engineering and evolution

Since a single genetic flaw can spell death for an organism, the challenge of managing a series of hundreds of specific changes was daunting, the researchers said. In both projects, the researchers paid particular attention to developing a methodical approach to planning and implementing changes and troubleshooting the results.

"We wanted to develop the ability to efficiently build the desired genome and to very quickly identify any problems -- from design flaws or from undesired mutations -- and develop workarounds," Lajoie said.

The team relied on a number of technologies developed in the Church lab and the Wyss Institute and with partners in academia and industry, including next-generation sequencing tools, DNA synthesis on a chip, and MAGE and CAGE genome editing tools. But one of the most important tools they used was the power of natural selection, the researchers added.

"When an engineering team designs a new cellphone, it's a huge investment of time and money. They really want that cell phone to work," Church said. "With E. coli we can make a few billion prototypes with many different genomes, and let the best strain win. That's the awesome power of evolution."

Most Distant Gravitational Lens Helps Weigh Galaxies

An international team of astronomers has found the most distant gravitational lens yet -- a galaxy that, as predicted by Albert Einstein's general theory of relativity, deflects and intensifies the light of an even more distant object. The discovery provides a rare opportunity to directly measure the mass of a distant galaxy. But it also poses a mystery: lenses of this kind should be exceedingly rare. Given this and other recent finds, astronomers either have been phenomenally lucky -- or, more likely, they have underestimated substantially the number of small, very young galaxies in the early Universe.

Light is affected by gravity, and light passing a distant galaxy will be deflected as a result. Since the first find in 1979, numerous such gravitational lenses have been discovered. In addition to providing tests of Einstein's theory of general relativity, gravitational lenses have proved to be valuable tools. Notably, one can determine the mass of the matter that is bending the light -- including the mass of the still-enigmatic dark matter, which does not emit or absorb light and can only be detected via its gravitational effects. The lens also magnifies the background light source, acting as a "natural telescope" that allows astronomers a more detailed look at distant galaxies than is normally possible.

A gravitational lens system consists of two objects: a distant source that supplies the light, and the lensing mass, or gravitational lens, which sits between us and the source and whose gravity deflects the light. When the observer, the lens, and the distant light source are precisely aligned, the observer sees an Einstein ring: a perfect circle of light that is the projected and greatly magnified image of the distant light source.

Now, astronomers have found the most distant gravitational lens yet. Lead author Arjen van der Wel (Max Planck Institute for Astronomy, Heidelberg, Germany) explains: "The discovery was completely by chance. I had been reviewing observations from an earlier project when I noticed a galaxy that was decidedly odd. It looked like an extremely young galaxy, but it seemed to be at a much larger distance than expected. It shouldn't even have been part of our observing programme!"

Van der Wel wanted to find out more and started to study images taken with the Hubble Space Telescope as part of the CANDELS and COSMOS surveys. In these pictures the mystery object looked like an old galaxy, a plausible target for the original observing programme, but with some irregular features which, he suspected, meant that he was looking at a gravitational lens. Combining the available images and removing the haze of the lensing galaxy's collection of stars, the result was very clear: an almost perfect Einstein ring, indicating a gravitational lens with very precise alignment of the lens and the background light source [1].

The lensing mass is so distant that the light, after deflection, has travelled 9.4 billion years to reach us [2]. Not only is this a new record, the object also serves an important purpose: the amount of distortion caused by the lensing galaxy allows a direct measurement of its mass. This provides an independent test for astronomers' usual methods of estimating distant galaxy masses -- which rely on extrapolation from their nearby cousins. Fortunately for astronomers, their usual methods pass the test.

But the discovery also poses a puzzle. Gravitational lenses are the result of a chance alignment. In this case, the alignment is very precise. To make matters worse, the magnified object is a starbursting dwarf galaxy: a comparatively light galaxy (it has only about 100 million solar masses in the form of stars [3]), but extremely young (about 10-40 million years old) and producing new stars at an enormous rate. The chance that such a peculiar galaxy would be gravitationally lensed is very small. Yet this is the second starbursting dwarf galaxy that has been found to be lensed. Either astronomers have been phenomenally lucky, or starbursting dwarf galaxies are much more common than previously thought, forcing astronomers to re-think their models of galaxy evolution.

Van der Wel concludes: "This has been a weird and interesting discovery. It was a completely serendipitous find, but it has the potential to start a new chapter in our description of galaxy evolution in the early Universe."

Notes

[1] The two objects are aligned to better than 0.01 arcseconds -- equivalent to a one millimetre separation at a distance of 20 kilometres.

[2] This time corresponds to a redshift z = 1.53. This can be compared with the total age of the Universe of 13.8 billion years. The previous record holder was found thirty years ago, and it took less than 8 billion years for its light to reach us (a redshift of about 1.0).

[3] For comparison, the Milky Way is a large spiral galaxy with at least one thousand times greater mass in the form of stars than this dwarf galaxy.
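
The figures in notes [1] and [2] can be checked with a short calculation. The sketch below is a rough sanity check, not the method used by the authors. The small-angle conversion is standard, while the lookback time assumes a flat Lambda-CDM universe with illustrative parameters (a Hubble constant of 70 km/s/Mpc and a matter density of 0.3) that are not taken from the article. Other reasonable parameter choices shift the result by a few percent.

```python
import math

ARCSEC_IN_RAD = math.pi / (180 * 3600)  # one arcsecond in radians
KM_PER_MPC = 3.0857e19                  # kilometres in one megaparsec
SECONDS_PER_GYR = 3.156e16              # seconds in one billion years


def separation_m(angle_arcsec, distance_m):
    """Small-angle approximation: separation = angle in radians * distance."""
    return angle_arcsec * ARCSEC_IN_RAD * distance_m


def age_at_redshift_gyr(z, h0=70.0, omega_m=0.3):
    """Age of a flat Lambda-CDM universe at redshift z, radiation neglected.

    h0 (km/s/Mpc) and omega_m are assumed values, not from the article.
    """
    omega_l = 1.0 - omega_m
    hubble_time_gyr = KM_PER_MPC / h0 / SECONDS_PER_GYR
    x = math.sqrt(omega_l / omega_m) * (1.0 + z) ** -1.5
    return 2.0 / (3.0 * math.sqrt(omega_l)) * hubble_time_gyr * math.asinh(x)


def lookback_time_gyr(z, **cosmology):
    """Light travel time from redshift z to today, in billions of years."""
    return age_at_redshift_gyr(0.0, **cosmology) - age_at_redshift_gyr(z, **cosmology)


# Note [1]: 0.01 arcseconds seen from 20 kilometres away.
print(f"separation: {separation_m(0.01, 20_000) * 1000:.2f} mm")

# Note [2]: light travel time for redshift z = 1.53.
print(f"lookback time: {lookback_time_gyr(1.53):.1f} billion years")
```

Under these assumptions the separation comes out just under one millimetre, and the lookback time lands within a few percent of the 9.4 billion years quoted above.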

Curiosity Confirms Origins of Martian Meteorites

Earth's most eminent emissary to Mars has just proven that those rare Martian visitors that sometimes drop in on Earth -- a.k.a. Martian meteorites -- really are from the Red Planet. A key new measurement of Mars' atmosphere by NASA's Curiosity rover provides the most definitive evidence yet of the origins of Mars meteorites while at the same time providing a way to rule out Martian origins of other meteorites.

The new measurement is a high-precision count of two forms of argon gas -- Argon-36 and Argon-38 -- accomplished by the Sample Analysis at Mars (SAM) instrument on Curiosity. These lighter and heavier forms, or isotopes, of argon exist naturally throughout the solar system. But on Mars the ratio of light to heavy argon is skewed because a lot of that planet's original atmosphere was lost to space, with the lighter form of argon being taken away more readily because it rises to the top of the atmosphere more easily and requires less energy to escape. That's left the Martian atmosphere relatively enriched in the heavier Argon-38.

Years of past analyses by Earth-bound scientists of gas bubbles trapped inside Martian meteorites had already narrowed the Martian argon ratio to between 3.6 and 4.5 (that is, 3.6 to 4.5 atoms of Argon-36 to every one atom of Argon-38), with the supposed Martian "atmospheric" value near four. Measurements by NASA's Viking landers in the 1970s put the Martian atmospheric ratio in the range of four to seven. The new SAM direct measurement on Mars now pins down the correct argon ratio at 4.2.

"We really nailed it," said Sushil Atreya of the University of Michigan, Ann Arbor, the lead author of a paper reporting the finding today in Geophysical Research Letters, a journal of the American Geophysical Union. "This direct reading from Mars settles the case with all Martian meteorites," he said.

One of the reasons scientists have been so interested in the argon ratio in Martian meteorites is that it was -- before Curiosity -- the best measure of how much atmosphere Mars has lost since the planet's earlier, wetter, warmer days billions of years ago. Figuring out the planet's atmospheric loss would enable scientists to better understand how Mars transformed from a once water-rich planet more like our own to today's drier, colder and less hospitable world.

Had Mars held onto its entire atmosphere and its original argon, Atreya explained, its ratio of the gas would be the same as that of the Sun and Jupiter. They have so much gravity that isotopes can't preferentially escape, so their argon ratio -- which is 5.5 -- represents that of the primordial solar system.

While argon comprises only a tiny fraction of the gases lost to space from Mars, it is special because it's a noble gas. That means the gas is inert, not reacting with other elements or compounds, and therefore a more straightforward tracer of the history of the Martian atmosphere.

"Other isotopes measured by SAM on Curiosity also support the loss of atmosphere, but none so directly as argon," said Atreya. "Argon is the clearest signature of atmospheric loss because it's chemically inert and does not interact or exchange with the Martian surface or the interior. This was a key measurement that we wanted to carry out on SAM."

NASA's Jet Propulsion Laboratory, Pasadena, Calif., manages the Curiosity mission for NASA's Science Mission Directorate, Washington. The SAM investigation on the rover is managed by NASA Goddard Space Flight Center, Greenbelt, Md.

Electrical System of the Heart

See where the pacemaker cells start the electrical wave of depolarization, and how it gets all the way to the ventricles of the heart. Rishi is a pediatric infectious disease physician and works at Khan Academy. These videos do not provide medical advice and are for informational purposes only. The videos are not intended to be a substitute for professional medical advice, diagnosis or treatment. Always seek the advice of a qualified health provider with any questions you may have regarding a medical condition. Never disregard professional medical advice or delay in seeking it because of something you have read or seen in any Khan Academy video!

Lub Dub

The heart breathes "Lub Dub"! Ever wonder why the heart sounds the way that it does? The opening and closing of the heart valves make the heart rhythm come alive with its lub dub beats... Rishi is a pediatric infectious disease physician and works at Khan Academy.

Flow through the Heart

Learn how blood flows through the heart, and understand the difference between systemic and pulmonary blood flow. Rishi is a pediatric infectious disease physician and works at Khan Academy.

Blood circulation in the heart

Blood circulation in the heart! An explanation of how blood circulates through the heart and the rest of the body.

Human heart

A brief overview of the structure and function of the human heart and circulatory system.

Researchers Sequence Non-Infiltrating Bladder Cancer Exome

Bladder cancer represents a serious public health problem in many countries, especially in Spain, where 11,200 new cases are recorded every year, one of the highest rates in the world. The majority of these tumours have a good prognosis (70-80% five-year survival after diagnosis), and in around 80% of cases they do not infiltrate the bladder muscle at the time of diagnosis.

Despite this, many of the tumours recur, requiring periodic cystoscopic tumour surveillance. This type of follow-up affects patients' quality of life while also incurring significant healthcare costs.

Researchers at the Spanish National Cancer Research Centre (CNIO), coordinated by Francisco X. Real, head of the Epithelial Carcinogenesis Group, and Nuria Malats, head of the Genetic & Molecular Epidemiology Group, have carried out the first exome sequencing for non-infiltrating bladder cancer, the most frequent type of bladder cancer and the one with the highest risk of recurrence (the exome is the part of the genome that contains protein synthesis information).

The results reveal new genetic pathways involved in the disease, such as cellular division and DNA repair, as well as new genes -- not previously described -- that might be crucial for understanding its origin and evolution.

"We know very little about the biology of bladder cancer, which would be useful for classifying patients, predicting relapses and even preventing the illness," says Cristina Balbás, a predoctoral researcher in Real's laboratory who is the lead author of the study.

The work consisted of analysing the exome from 17 patients diagnosed with bladder cancer and subsequently validating the data via the study of a specific group of genes in 60 additional patients.

"We found up to 9 altered genes that hadn't been described before in this type of tumour, and of these we found that STAG2 was inactive in almost 40% of the least aggressive tumours," says Real.

The researcher adds: "Some of these genes are involved in previously undescribed genetic pathways in bladder cancer, such as cell division and DNA repair; also, we confirmed and extended other genetic pathways that had previously been described in this cancer type, such as chromatin remodelling."

An Unknown Agent in Bladder Cancer

The STAG2 gene was first associated with cancer just over two years ago, although "little is known about it, and nothing about its relationship to bladder cancer," says Balbás. Previous studies suggest it participates in chromosome separation during cell division (chromosomes contain the genetic material), which is where it might be related to cancer, although it has also been associated with maintenance of DNA's 3D structure and with gene regulation.

Contrary to what might be expected, the article reveals that tumours with an alteration in this gene frequently lack changes in the number of chromosomes, which indicates, according to Real, that "this gene participates in bladder cancer via different mechanisms than chromosome separation."

The authors have also found, by analysing tumour tissue from more than 670 patients, that alterations in STAG2 are associated, above all, with tumours from patients with a better prognosis. How and why these phenomena work still needs to be discovered, but the researchers predict that "mutations in STAG2 and other additional genes that we showed to be altered could provide new therapeutic opportunities in some patient sub-groups."
